Contributors: Jellejurre, JustSleightly

Designing Scale-Friendly Systems

Scaling in VRChat

VRChat recently added Avatar Scaling to the game. Players can scale their avatar between a minimum and maximum height using the hand circle menu. By default, these limits are 0.2m and 5m, but worlds can set them to anything from 0.1m to 100m, and can even force avatars to be a certain height. Worlds can also disable scaling, but it is enabled by default.

Avatars that are uploaded with sizes outside of the default size range won’t be scaled by default, but will be set to a value inside of the range when the user uses avatar scaling or when the world forces a certain avatar height. You can’t upload an avatar with a shoulder height lower than 0.1m, but you can upload an avatar with any height above that.

This scaling update may break older systems that use static offsets in certain places and aren’t designed to change scale. This article goes over how to design new systems, or modify existing ones, to work in this new scaling environment.

The general rule for creating scale-friendly systems is that whenever the avatar scales, all relative effects should stay the same. This means that, for example, the distance between two objects, or the distance over which an audio source can be heard, should scale with avatar height.

It seems that VRChat currently does not late-sync scale parameters. All scale-related float parameters seem to be set to 1.0 for late joiners. Hopefully this will be fixed soon.

Shader Material Properties

Depending on the shader and what properties you use, some values may be absolute instead of relative, and not scale well with your avatar. Examples include many properties that utilize offsets from the surface/UV, such as vertex offset, point-to-point dissolve start/end values, and geometric dissolve offsets.

You may be able to make some of these properties follow avatar scale using the techniques in the Scale Friendly Animating section.

Constraint Components

Constraint components all respond quite well to scaling, since offsets are relative to constraint target scale. The only exception to this is parent constraint offsets. Their offsets are absolute, and don’t scale with target scale in Unity.

VRChat has implemented a change for this: in VRChat, parent constraint offsets scale with target scale. This means that parent constraints behave differently in Unity and in VRChat.

The latest version of the AV3 Emulator emulates this relative behaviour in Unity.

World Constraints

World constraints require a bit more care to work properly with scale, since we don’t want the offset from the world origin to scale (which would move the object), but we do want the object in the container to scale.

To achieve this, add a Scale constraint to the World Constraint GameObject, with the World prefab as its source. This will make it so the object doesn’t move when scaling.

If you want the prop itself to scale with your avatar, you would want to add another scale constraint to the Container GameObject with the source as your avatar.

The latest version of VRLabs’ World Constraint has the World prefab scale constraint already added. Note that this doesn’t include the prop scaling; you will need to add this yourself if you want it.

Cloth Components

Cloth components scale on their own, except that they need to be disabled and re-enabled for the offsets to be calculated properly. This is currently done in the latest VRChat Open Beta when scaling.

The latest version of the AV3 Emulator on the master branch emulates this cloth toggle behaviour in Unity.

Particle Systems

For particle systems to scale, their Scaling Mode needs to be set to Hierarchy Scale. However, even if this property is set, you might need to animate other properties with avatar scale, such as properties involving speed/gravity/velocity. How this is done is elaborated on in the Scale Friendly Animating section.

Physics Components

Some properties of Rigidbodies and Joints may not scale as intended, and may require certain properties (such as limits/velocity) to be scaled along with avatar size. How this is done is elaborated on in the Scale Friendly Animating section.

Audio Sources

These components don’t scale very well either, and will likely require their falloff distance property to be scaled along with avatar size. How this is done is elaborated on in the Scale Friendly Animating section.

Scale Friendly Animating

To animate properties relative to avatar scale, VRChat provides five read-only native parameters:

- ScaleModified is a bool that returns True if your Avatar is not at your default scale via avatar scaling.
- ScaleFactor and ScaleFactorInverse are relative values based on your Avatar’s default scale upon upload, which are more effective for quick drop-in Direct Blend Tree (DBT) multiplications to modify your existing animation clips made for your current avatar.
- EyeHeightAsMeters and EyeHeightAsPercent are absolute values based on the World’s units that are more effective if you need a more universal solution, such as for prefabs/systems that need to be applied to any avatar at any default size, and may take more effort/math to convert your animation clips to work with.
  - EyeHeightAsPercent is the only parameter that is normalized and compatible with state motion time (with values ranging from 0.0 - 1.0), as long as the avatar height is between 0.2m and 5m (which are the default limits).
  - EyeHeightAsMeters is the only one that scales linearly beyond the 5m Avatar scale limit towards the Udon max of 100m.
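As a rough illustration of how these five values relate to one another, the sketch below derives them from an avatar's uploaded eye height and its current eye height. The helper name `scale_parameters` and the exact formulas are assumptions made for illustration, in particular that ScaleFactor is the ratio of current to uploaded eye height and that EyeHeightAsPercent maps the 0.2m to 5m range linearly onto 0.0 to 1.0. VRChat computes the real parameters internally.

```python
def scale_parameters(default_eye_height, current_eye_height,
                     min_height=0.2, max_height=5.0):
    """Hypothetical helper: approximate the five scale parameters."""
    # Assumption: ScaleFactor is current height relative to uploaded height
    scale_factor = current_eye_height / default_eye_height
    # Assumption: the 0.2m - 5m range maps linearly onto 0.0 - 1.0
    percent = (current_eye_height - min_height) / (max_height - min_height)
    return {
        "ScaleModified": current_eye_height != default_eye_height,
        "ScaleFactor": scale_factor,
        "ScaleFactorInverse": 1.0 / scale_factor,
        "EyeHeightAsMeters": current_eye_height,
        # Assumption: clamped to 0.0 - 1.0 outside the default range
        "EyeHeightAsPercent": min(1.0, max(0.0, percent)),
    }


# An avatar uploaded at 1.5m eye height, scaled up to 3.0m
params = scale_parameters(1.5, 3.0)
```

Under these assumptions, ScaleFactor and ScaleFactorInverse depend on the avatar's uploaded height, while the two EyeHeight values depend only on the current height, which is why the latter suit prefabs meant for any avatar.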

Avatars 3.0 animations can be tested using the Av3Emulator (https://github.com/lyuma/Av3Emulator), which can be found as a curated package in the “Manage Projects” section of the VRChat Creator Companion.

Using EyeHeightAsMeters with Direct Blend Trees

This method is one of the most universal ways to make an animation clip scale. Its primary advantage is being compatible with scaling both static and non-static clips, as every keyframe in the animation clip will have its value multiplied with scale. Its limitations are that all the values are scaled with your Avatar scale, and that they are scaled linearly. If you have any properties that should not have their values multiplied (such as weights normalized between 0 and 1), you may need to separate those properties out to another animation clip.

If you have a value you want to convert to scale linearly with avatar scale, you can divide this value by your Avatar’s Viewpoint Y value to get the value per meter, and animate this with a Direct Blend Tree (DBT) with blend parameter EyeHeightAsMeters to get a linearly scaling animation.

As an example, say your particle system has a velocity of 3.0 and your avatar has a default viewpoint Y height of 1.5m.

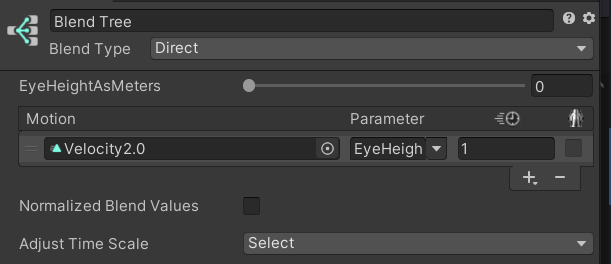

You would then make an Animation Clip with two keyframes, with both frames setting the velocity to 3.0 / 1.5 = 2.0. This animation would be played in a Direct Blend Tree with EyeHeightAsMeters as a direct blend parameter.

In game, if your height is 1.5m, the actual value would be Value * EyeHeightAsMeters, or 2.0 * 1.5 = 3.0, as expected.

If you change your avatar scale to 3.0m (twice as big), the values would be 2.0 * 3.0 = 6.0 (twice as large). Your value now scales linearly with avatar height.

The Direct Blend Tree from the example.
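The arithmetic of the example above can be sketched in a few lines of Python. The function names are hypothetical and only model the multiplication that the Direct Blend Tree performs; they are not part of any VRChat or Unity API.

```python
def per_meter_value(value, viewpoint_y):
    """Value to write into both keyframes of the animation clip."""
    return value / viewpoint_y


def blended_value(clip_value, eye_height_as_meters):
    """Model of the DBT output: clip value times the blend parameter."""
    return clip_value * eye_height_as_meters


# Particle velocity of 3.0 on an avatar with a 1.5m viewpoint height
clip_value = per_meter_value(3.0, 1.5)          # 2.0
at_default = blended_value(clip_value, 1.5)     # 3.0, unchanged at upload size
at_double = blended_value(clip_value, 3.0)      # 6.0, twice as large at 3.0m
```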

This will work by default for values above 1 meter, but if you want it to work for values below 1 meter, you will need to put everything you’re animating in the clip at 0 when uploading, or you would need a reset layer above this one where you animate the values to 0. In our example, we could either set the particle system velocity to 0 when uploading, or animate it to 0 in a layer above this one.
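One way to picture why the uploaded value needs to be 0 is the simplified model below. It assumes that when the blend weight is below 1, the remainder of the blend is filled in by the property's default (uploaded) value, and that weights of 1 or more simply multiply the clip value. This is an assumption about the blending behaviour made for illustration, and `direct_blend_result` is a hypothetical name, not a Unity function.

```python
def direct_blend_result(clip_value, weight, default_value):
    """Simplified, assumed model of a single-clip Direct Blend Tree."""
    if weight >= 1.0:
        # At or above 1 the clip value is simply multiplied by the weight
        return clip_value * weight
    # Assumption: below 1, the default value fills the remaining weight
    return clip_value * weight + default_value * (1.0 - weight)


clip_value = 2.0   # velocity per meter from the example
height = 0.75      # avatar scaled below 1 meter

# Uploaded with velocity 3.0: the default leaks into the result
with_default = direct_blend_result(clip_value, height, 3.0)   # 2.25
# Uploaded (or reset) with velocity 0: result is the intended 2.0 * 0.75
with_zero = direct_blend_result(clip_value, height, 0.0)      # 1.5
```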

When mixing a WD On Direct Blend Tree with WD Off states in the same animator layer, you may experience issues if any transitions out of the DBT state have a transition duration. This includes AnyState transitions. This behaviour is only observed in VRChat after upload, and not in the native Unity Editor.

Note that, as with all Direct Blend Trees, the state this Direct Blend Tree is in should be Write Defaults On, and should have the text (WD On) somewhere in the name of the state. More information on this can be found at the Write Defaults page.

Using EyeHeightAsPercent with Motion Time

This method’s primary advantage is being able to mix non-scaling and scaling properties together in the same animation clip, as well as being able to scale non-linearly if necessary, all without needing a Blend Tree. Its limitation is that it is only compatible with static animation clips that do not actively change values when the state is entered, because the use of Motion Time normalizes the duration of the animation clip. This is also limited to the scalable range of Avatars, and may cause unintended behaviour if worlds enforce scaling beyond the avatar scale maximum of 5 meters.

If you have a value you want to convert to scale linearly with avatar scale, you can divide this value by your Avatar’s Viewpoint Y value to get the value per meter (unit value). Afterwards, divide your unit value by 5 to get the value of the first keyframe of your new scale-friendly animation clip, and multiply your unit value by 5 to get the value of the last keyframe of your new animation clip. You can then add this animation clip to a state with motion time parameter EyeHeightAsPercent to get a linearly scaling animation.
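As a sketch of the keyframe calculation above, the Python below computes the first and last keyframe values and then checks that interpolating between them reproduces linear scaling. It assumes that EyeHeightAsPercent maps 0.2m to 0.0 and 5m to 1.0 linearly, and that the clip interpolates linearly between its two keyframes; the function names are hypothetical.

```python
MIN_HEIGHT = 0.2  # default minimum avatar height in meters
MAX_HEIGHT = 5.0  # default maximum avatar height in meters


def motion_time_keyframes(value, viewpoint_y):
    """Return (first, last) keyframe values for the scale-friendly clip."""
    unit_value = value / viewpoint_y          # value per meter
    return unit_value / 5.0, unit_value * 5.0


def value_at_height(first_key, last_key, eye_height):
    """Sample the clip at the motion time assumed for this eye height."""
    percent = (eye_height - MIN_HEIGHT) / (MAX_HEIGHT - MIN_HEIGHT)
    return first_key + (last_key - first_key) * percent


# Same example as before: velocity 3.0 on a 1.5m avatar
first_key, last_key = motion_time_keyframes(3.0, 1.5)
at_default = value_at_height(first_key, last_key, 1.5)   # approximately 3.0
at_double = value_at_height(first_key, last_key, 3.0)    # approximately 6.0
```

Dividing by 5 corresponds to the 0.2m lower limit and multiplying by 5 to the 5m upper limit, so the two keyframes are the scaled values at both ends of the default range.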

For Shader Developers

If you are writing any shaders for your system, it is possible you may need to take scale into account for specific effects.

While animating a value on the material is always an option, if the shader is applied to a non-skinned mesh renderer, it is also possible to get the object scale directly in the shader like so:

    float3 worldScale = float3(
        length(float3(unity_ObjectToWorld[0].x, unity_ObjectToWorld[1].x, unity_ObjectToWorld[2].x)), // scale x axis
        length(float3(unity_ObjectToWorld[0].y, unity_ObjectToWorld[1].y, unity_ObjectToWorld[2].y)), // scale y axis
        length(float3(unity_ObjectToWorld[0].z, unity_ObjectToWorld[1].z, unity_ObjectToWorld[2].z)) // scale z axis
    );
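To see why the column lengths of the object-to-world matrix give the scale, the Python sketch below builds the upper 3x3 part of a rotate-and-scale matrix and recovers the scale the same way the shader snippet does. The helper names are hypothetical, and the matrix is a simplified stand-in for unity_ObjectToWorld that ignores translation and parent transforms.

```python
import math


def rotation_scale_matrix(yaw_radians, scale):
    """Upper 3x3 of a matrix that rotates about Y after scaling per axis."""
    c, s = math.cos(yaw_radians), math.sin(yaw_radians)
    rotation = [[c, 0.0, s],
                [0.0, 1.0, 0.0],
                [-s, 0.0, c]]
    # Rotation times diag(scale): each column is scaled by its axis scale
    return [[rotation[r][col] * scale[col] for col in range(3)]
            for r in range(3)]


def world_scale(m):
    """Length of each matrix column, mirroring the shader snippet above."""
    return tuple(math.sqrt(sum(m[r][col] ** 2 for r in range(3)))
                 for col in range(3))


matrix = rotation_scale_matrix(math.radians(30.0), (2.0, 3.0, 0.5))
recovered = world_scale(matrix)   # approximately (2.0, 3.0, 0.5)
```

Because a rotation keeps every column at unit length, the length of each column after scaling is the scale along that axis, regardless of how the object is rotated.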